The test runs on Windows, so I can’t test a TBDR (tile-based deferred rendering) renderer, but I can at least get some data. The Intel GPU shows no performance change (or rather, the performance delta is within the noise). The Nvidia GPU shows that tessellation is 8% faster. This is reassuring: it shows that, even on a non-TBDR renderer, tessellation is no slower, and sometimes faster, than pre-tessellated models. This, coupled with the other benefits of tessellation (memory savings and frame-by-frame flexibility), shows that tessellation is worth pursuing.

D3D12 models tessellation as two additional programmable stages, the hull shader and the domain shader, placed on either side of a fixed-function tessellator inside the existing graphics pipeline. The tessellated pipeline looks like: Vertex Shader > Hull Shader > Tessellation > Domain Shader > Geometry Shader > Rasterization > Fragment Shader. The vertex data and vertex shader act the same as they do without tessellation, except that they operate on the control points of the mesh rather than on vertices.
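To make the division of labor after the hull shader concrete, here is a minimal Python sketch of two steps: a stand-in for the fixed-function tessellator that emits barycentric domain locations, and a flat-triangle domain shader that interpolates the patch’s three control points at each location. The function names are hypothetical, and the uniform barycentric grid is a simplification chosen for illustration; the real D3D12 tessellator arranges its points differently, so the vertex counts here won’t match its output.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def uniform_barycentrics(factor: int) -> List[Vec3]:
    """Hypothetical stand-in tessellator: barycentric (u, v, w) points on a
    uniform grid with `factor` segments per edge. (Simplified; D3D12's
    fixed-function tessellator lays its points out differently.)"""
    if factor < 1:
        raise ValueError("tessellation factor must be at least 1")
    points = []
    for i in range(factor + 1):
        for j in range(factor + 1 - i):
            u = i / factor
            v = j / factor
            # Clamp w so floating-point rounding never makes it negative.
            points.append((u, v, max(0.0, 1.0 - u - v)))
    return points


def domain_shader(control_points: Tuple[Vec3, Vec3, Vec3], uvw: Vec3) -> Vec3:
    """Flat-triangle domain shader: weight each control point by its
    barycentric coordinate and sum the results."""
    p0, p1, p2 = control_points
    u, v, w = uvw
    return tuple(u * a + v * b + w * c for a, b, c in zip(p0, p1, p2))


patch = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
vertices = [domain_shader(patch, uvw) for uvw in uniform_barycentrics(4)]
print(len(vertices))  # 15
```

With a factor of 64 (the maximum used in the benchmark), this simplified grid produces 2,145 points from the same three control points.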

Because there are fewer control points than vertices, the GPU has less total work to perform (and performance therefore increases). Memory usage is decreased because the high-resolution model is never stored in memory. Rather than having a fixed number of LODs for your mesh and snapping between them, tessellation allows the density of the mesh to change fluidly from frame to frame. This kind of flexibility is impossible without tessellation.

If the tessellation factors are all 1, the control points and the vertices are identical, which means that the domain shader effectively acts as a poor-man’s geometry shader. It can consult the vertices of the entire triangle, rather than each vertex being independent as in a vertex shader (but it can’t generate additional geometry). Given WebGPU’s general direction of not including a real geometry shader, this can get us halfway there.

I wanted to understand the performance claims, so I wrote a benchmark, Tessellation.zip, to measure them (it’s the “PerformanceTest” target in the linked project). The goal is to compare the performance of a tessellated mesh against a non-tessellated but identical mesh. I’m not trying to compare the performance of any computation performed in any shader, so the shaders involved do essentially nothing. Unfortunately, Metal doesn’t seem to allow for reading back the tessellated mesh, so I wrote this benchmark using Direct3D 12. One target (the “Tessellation” target in the linked project) draws a triangle at maximum (64) tessellation and uses the geometry shader to write out the locations of all the interpolated vertices to a UAV, which gets saved to disk. Then, the benchmark draws this same tessellated mesh (but without the geometry shader) and compares that to drawing the pre-tessellated mesh, which it has read off disk.
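The poor-man’s geometry shader idea can be modeled the same way. Assuming all tessellation factors are 1, so the tessellator only emits the three corners of the triangle, each domain shader invocation still receives all three control points, which lets it compute a per-triangle quantity such as a flat face normal; a vertex shader, which sees one vertex at a time, cannot. This is a hypothetical Python model of that data flow, not HLSL, and the function names are made up for illustration.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    if length == 0.0:
        raise ValueError("degenerate triangle has no normal")
    return (a[0] / length, a[1] / length, a[2] / length)


def domain_shader_with_face_normal(control_points, uvw):
    """Hypothetical domain shader invocation: it sees all three control
    points, so it can output a flat face normal alongside the position."""
    p0, p1, p2 = control_points
    u, v, w = uvw
    position = tuple(u * a + v * b + w * c for a, b, c in zip(p0, p1, p2))
    # The normal's direction depends on the triangle's winding order.
    normal = normalize(cross(sub(p1, p0), sub(p2, p0)))
    return position, normal


# With every tessellation factor at 1, the domain locations are just the
# corners, so the output vertices coincide with the control points.
corners = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
for uvw in corners:
    print(domain_shader_with_face_normal(triangle, uvw))
```

Every vertex of the triangle ends up carrying the same face normal, which is the kind of per-primitive output people often reach for a geometry shader to produce.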

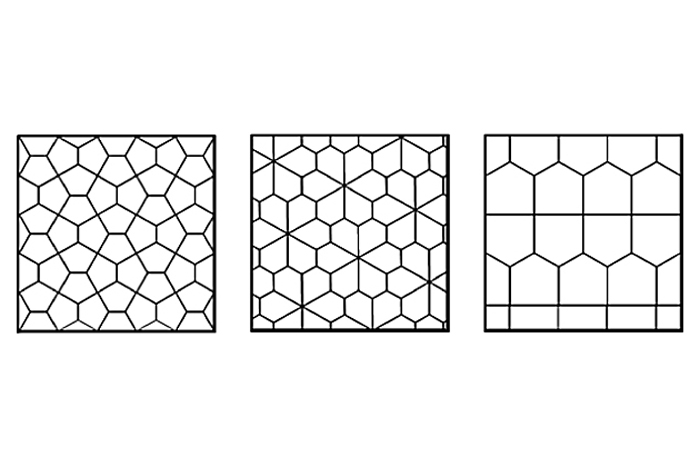

White grey tessellation triangle Patch#

Tessellation is a way of combatting the problem of keeping a mesh’s triangle density matched to its size on screen. Rather than representing a mesh as a collection of triangles, tessellation represents a mesh as a collection of “patches,” where a patch represents a smooth, curved, mathematical surface. Rather than the artist baking this mesh into a collection of triangles at authoring time, the GPU has facilities to convert the mesh into triangles at draw-call time. Each draw can have entirely independent parameters for this conversion, which means the density of triangles can change fluidly from one frame to the next.

There are a few different pieces of motivation here, grouped into the categories of 1) performance, 2) memory usage, and 3) new rendering possibilities. The pipeline reads patch data from memory rather than triangle data. Because the number of patches is almost always smaller than the number of generated triangles, this decreases the memory bandwidth needed to render the mesh (and therefore increases performance). Skinning and morphing can be done on the patch control points rather than on the vertex data itself.
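The text doesn’t pin down which kind of curved surface a patch is. As an assumption for illustration, the sketch below uses a bicubic Bézier patch with a 4x4 grid of control points. It shows two of the properties described above: the same 16 control points can be evaluated at whatever density a given draw asks for, and a deformation (a stand-in for skinning or morphing) only has to touch the control points. The function names are hypothetical.

```python
from math import comb
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Patch = Sequence[Sequence[Vec3]]  # 4x4 grid of control points


def bernstein3(i: int, t: float) -> float:
    """Cubic Bernstein basis polynomial B_{i,3}(t)."""
    return comb(3, i) * t ** i * (1.0 - t) ** (3 - i)


def eval_bicubic_bezier(ctrl: Patch, u: float, v: float) -> Vec3:
    """Evaluate the surface point at parameters (u, v) in [0, 1]^2."""
    x = y = z = 0.0
    for i in range(4):
        bu = bernstein3(i, u)
        for j in range(4):
            weight = bu * bernstein3(j, v)
            px, py, pz = ctrl[i][j]
            x += weight * px
            y += weight * py
            z += weight * pz
    return (x, y, z)


def tessellate(ctrl: Patch, n: int) -> List[Vec3]:
    """Sample the patch on an (n+1) x (n+1) grid; `n` can change every draw."""
    return [eval_bicubic_bezier(ctrl, i / n, j / n)
            for i in range(n + 1) for j in range(n + 1)]


# A flat 4x4 control grid over the unit square, with the four interior
# control points lifted to make a bump.
flat = [[(i / 3, j / 3, 0.0) for j in range(4)] for i in range(4)]
bumped = [[(x, y, 0.5 if 0 < i < 3 and 0 < j < 3 else z)
           for j, (x, y, z) in enumerate(row)] for i, row in enumerate(flat)]

coarse = tessellate(bumped, 2)  # 9 points
fine = tessellate(bumped, 16)   # 289 points from the same 16 control points

# A "morph" moves only the 16 control points, never the generated vertices.
raised = [[(x, y, z + 1.0) for (x, y, z) in row] for row in bumped]
```

Storing `bumped` costs 16 points no matter whether a frame samples it with `n = 2` or `n = 16`, which is the memory and flexibility argument in miniature.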

Traditional non-tessellated 3D rendering works well when the ratio of mesh density to screen-space size is roughly constant. However, in 3D graphics, it’s common for objects to move closer to and farther from the camera, meaning that their screen-space size can change dramatically. When an object appears larger on the screen, it demands a higher density of triangles in order to maintain visual fidelity.
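One way to turn “screen-space size” into a triangle density is to estimate how many pixels an object covers and pick a tessellation factor so that each generated edge spans roughly a fixed number of pixels. In D3D12 the tessellation factors are produced by the hull shader’s patch-constant function, so a calculation like this would run there every frame. The sketch below is a hypothetical Python version; the bounding-sphere approximation and the 8-pixel target edge length are assumptions, and the clamp to 64 matches the maximum factor mentioned for the benchmark.

```python
import math

MAX_TESS_FACTOR = 64  # D3D's maximum tessellation factor


def projected_diameter_px(radius: float, distance: float,
                          fov_y: float, viewport_height_px: int) -> float:
    """Approximate on-screen diameter (pixels) of a bounding sphere under a
    perspective projection with vertical field of view `fov_y` (radians)."""
    if distance <= 0.0:
        raise ValueError("object must be in front of the camera")
    return radius * viewport_height_px / (distance * math.tan(fov_y / 2.0))


def tess_factor(radius: float, distance: float, fov_y: float,
                viewport_height_px: int, target_edge_px: float = 8.0) -> int:
    """Hypothetical heuristic: choose a factor so each generated edge spans
    roughly `target_edge_px` pixels."""
    size_px = projected_diameter_px(radius, distance, fov_y, viewport_height_px)
    factor = math.ceil(size_px / target_edge_px)
    # Clamp to [1, 64]: 1 keeps the patch drawn, 64 is the maximum.
    return max(1, min(MAX_TESS_FACTOR, factor))


for d in (1.0, 10.0, 100.0, 1000.0):
    print(d, tess_factor(1.0, d, math.radians(60), 1080))
```

The lower clamp matters because, under the D3D rules, a tessellation factor of 0 (or NaN) culls the patch entirely rather than drawing it coarsely.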